Speaker

Description

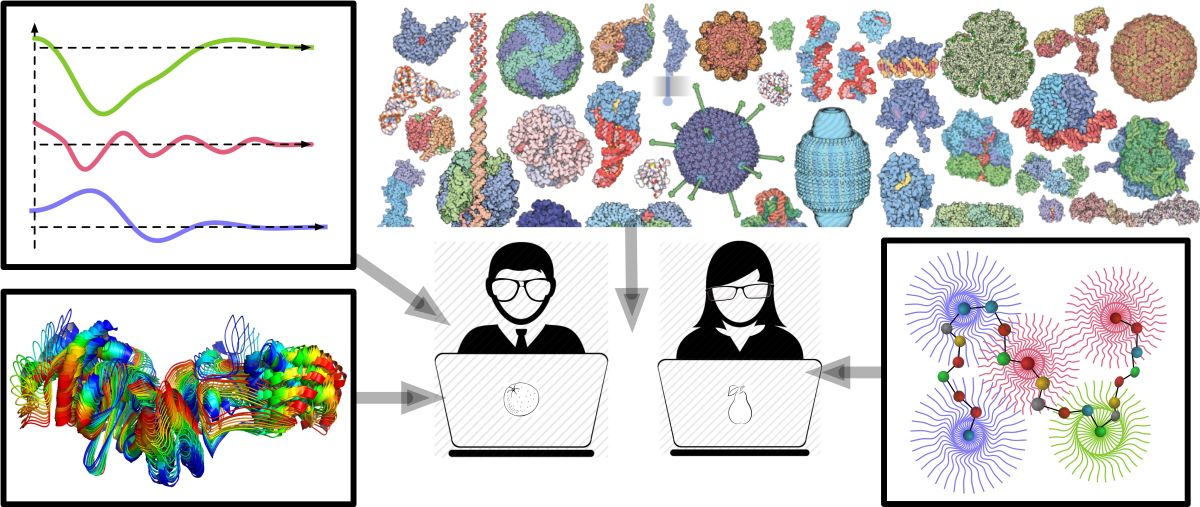

Traditional protein-protein docking algorithms often rely on extensive candidate sampling followed by re-ranking. These steps are time-consuming, which limits their applicability in scenarios that require high-throughput complex structure prediction, such as structure-based virtual screening. Deep learning methods, leveraging the power of modern GPUs, have substantially accelerated the docking process. Despite this speedup, a persistent challenge remains: docking accuracy often fails to generalize beyond the training data. Deep learning approaches to protein-protein docking can be broadly classified as either discriminative or generative. Discriminative models learn a functional mapping from the input (the sequences and structures of the docking partners) to the output (the docked protein complex structure). Generative models, in contrast, learn the underlying distribution of a dataset (here, docked structures) and generate novel, realistic samples that resemble the original data. This study compares two representative models from our lab for protein docking: GeoDock, a discriminative model, and DockDiffusion, a generative model. Using identical training and test datasets and similar neural network architectures, we compare the generalizability, docking accuracy, and inference speed of both methods. The results suggest that while discriminative models such as GeoDock can accurately map input features to output structures within the training data, their performance may decline on unseen protein interactions. Generative models such as DockDiffusion, by learning the underlying distribution of docked structures, show greater potential to generalize to diverse protein interfaces.

| Submitting to: | Integrative Computational Biology workshop |
|---|---|